Building Enterprise-Grade Real-Time Alert Systems: From Equipment Failures to Fraud Detection

Transform your business operations with intelligent, automated alerting that keeps you ahead of critical issues.

In today's fast-paced digital landscape, the difference between proactive response and reactive damage control can make or break your business. After architecting and implementing numerous real-time alert systems across various industries, I'm excited to share how a well-designed 3-tier architecture can power multiple critical use cases that every enterprise needs.

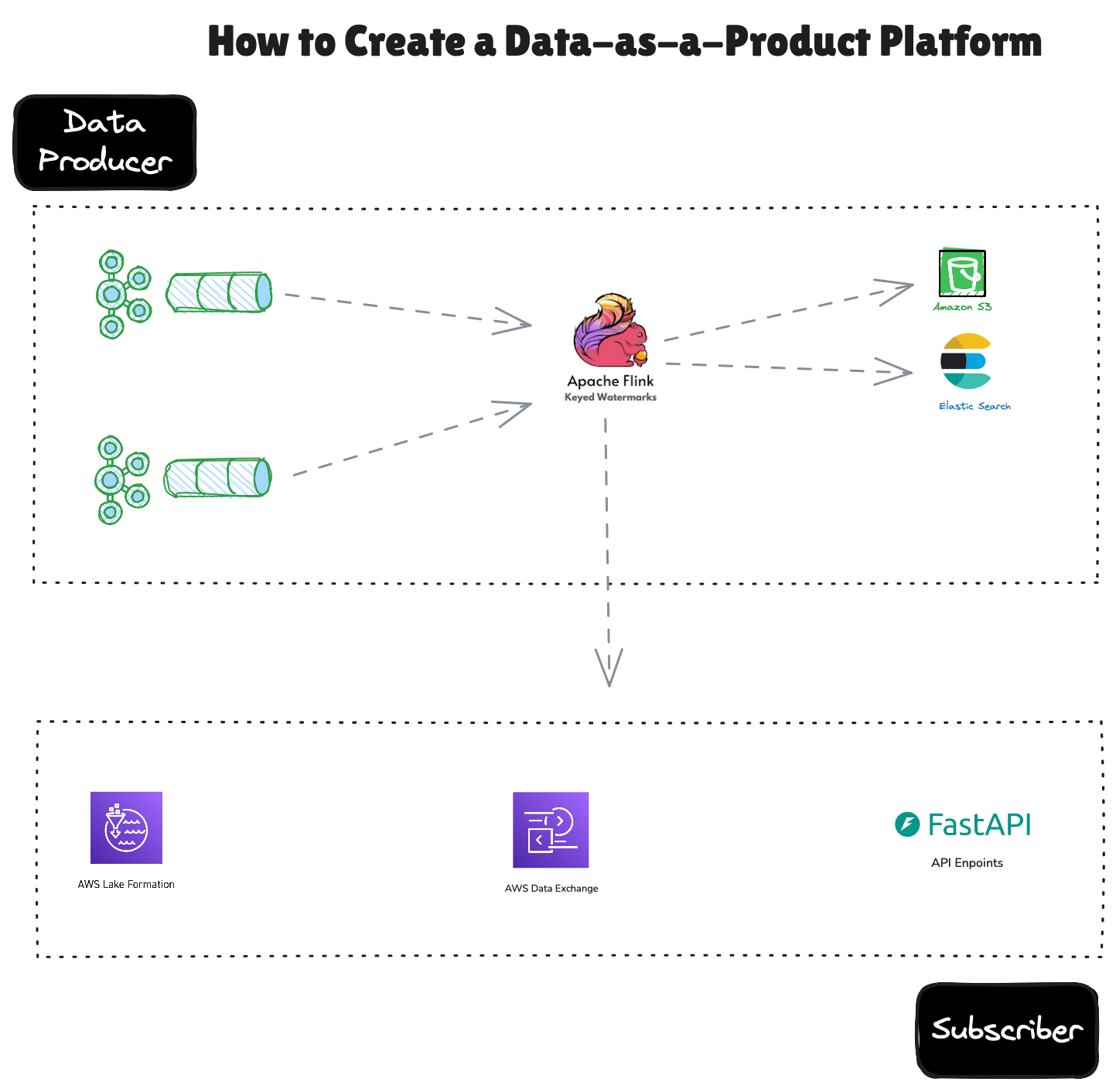

From predictive maintenance to fraud detection, every use case below runs on the same 3-tier architecture. The infrastructure tier handles the plumbing; the real work happens inside the “black box” of stream processing.

Here is how the tiers fit together, and what the right architecture makes possible when it turns reactive operations into proactive ones:

The Architecture

Infrastructure: Kafka + AWS (S3, DynamoDB) + PostgreSQL + Elasticsearch

DynamoDB: Hot path + rules engine

PostgreSQL: Context

Elasticsearch: Analytics

S3: Cold path

Processing:

Apache Flink for real-time stream processing

Alert Processing (Rules Engine + Context)
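To make the "Rules Engine + Context" step concrete, here is a minimal sketch of enriching an event with context and then checking it against a limit. It is plain Python rather than a Flink job, and every name in it (`CONTEXT`, `enrich`, `evaluate`, the field names, the sample device) is hypothetical. In the architecture above, the context would come from PostgreSQL rather than an in-memory dict.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical in-memory stand-in for the context store (PostgreSQL in this design).
CONTEXT = {
    "pump-7": {"site": "plant-a", "max_temp_c": 80.0},
}


@dataclass
class Alert:
    device_id: str
    site: str
    message: str


def enrich(event: dict) -> dict:
    """Merge the static context for the device into the raw event."""
    context = CONTEXT.get(event["device_id"], {})
    return {**event, **context}


def evaluate(event: dict) -> Optional[Alert]:
    """Return an Alert if the enriched event breaches its per-device limit."""
    enriched = enrich(event)
    limit = enriched.get("max_temp_c")
    if limit is not None and enriched["temp_c"] > limit:
        return Alert(
            device_id=enriched["device_id"],
            site=enriched["site"],
            message=f"temperature {enriched['temp_c']} C exceeds limit {limit} C",
        )
    # No limit known for this device, or the reading is within bounds.
    return None
```

Calling `evaluate({"device_id": "pump-7", "temp_c": 91.5})` yields an alert that already carries the site name, which is the point of enriching before alerting: the person receiving the alert does not have to look it up.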

Application:

FastAPI + Intelligent Alert Consumer

Five Game-Changing Use Cases

Maintenance Alerts

Monitor equipment sensors → Prevent $50K/hour downtime. Result: 73% reduction in unplanned downtime.

Equipment Malfunction Detection

Process thousands of telemetry points → Instant failure response. Result: 99.7% uptime, 18 major incidents prevented.
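One simple way to turn a stream of telemetry points into a malfunction signal is a count-based sliding window. The sketch below is an assumption on my part, not the detection logic from any specific deployment: it is plain Python standing in for what a Flink window would do, and `RollingMeanDetector` and its parameters are hypothetical names.

```python
from collections import deque


class RollingMeanDetector:
    """Flags a reading when the mean of the last `size` readings exceeds `threshold`."""

    def __init__(self, size: int, threshold: float) -> None:
        if size <= 0:
            raise ValueError("size must be positive")
        self.window = deque(maxlen=size)  # oldest reading drops out automatically
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        """Add one reading and report whether the windowed mean is above threshold."""
        self.window.append(value)
        # Wait until the window is full so early readings are not judged on partial data.
        if len(self.window) < self.window.maxlen:
            return False
        return sum(self.window) / len(self.window) > self.threshold
```

Averaging over a window trades a little latency for fewer alerts on momentary sensor noise. The window size and threshold are exactly the kind of values that belong in the rules store rather than in code.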

Predictive Maintenance

ML-powered predictions → Optimal maintenance timing. Result: 23% longer equipment life.

Network Threat Alerts

Real-time traffic analysis → Sub-minute threat response. Result: 94% of threats mitigated within 60 seconds.

Fraud Detection

Sub-100ms transaction analysis → Real-time fraud blocking. Result: 99.2% fraud caught, 67% fewer false positives.

Smart Alert Consumer

Multi-Channel Communications:

Slack alerts with rich context

Status page updates

Email/SMS notifications

PagerDuty integration

Custom webhooks
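A sketch of how one consumer might fan an alert out to several channels follows. It assumes each channel is wrapped as a plain callable. The `Dispatcher` class and the channel names are hypothetical, and no real Slack or PagerDuty client is called here.

```python
from typing import Callable, Dict, List

# A channel is any callable that takes the alert payload and delivers it.
Channel = Callable[[dict], None]


class Dispatcher:
    """Sends one alert to several named channels and records what happened."""

    def __init__(self) -> None:
        self._channels: Dict[str, Channel] = {}

    def register(self, name: str, send: Channel) -> None:
        self._channels[name] = send

    def dispatch(self, alert: dict, targets: List[str]) -> Dict[str, str]:
        """Try every target and return a per-channel status string."""
        results: Dict[str, str] = {}
        for name in targets:
            send = self._channels.get(name)
            if send is None:
                results[name] = "unknown channel"
                continue
            try:
                send(alert)
                results[name] = "ok"
            except Exception as exc:
                # Keep going so an outage in one channel does not block the rest.
                results[name] = f"failed: {exc}"
        return results
```

Returning a per-channel status gives the audit trail something to record, and isolating failures means an SMS provider outage does not stop the Slack message from going out.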

Smart Processing:

Contextual enrichment with historical data

De-duplication to prevent alert fatigue

Automatic escalation rules

Complete audit trails
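De-duplication and escalation can both be sketched in a few lines. What follows is a minimal illustration under my own assumptions, not a description of a specific production consumer: the suppression window, the alert key format, and the policy shape (a list of delay and target pairs) are all hypothetical.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple


class Deduplicator:
    """Suppresses repeats of the same alert key within `window_s` seconds."""

    def __init__(self, window_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.window_s = window_s
        self.clock = clock  # injectable so the behaviour can be tested without sleeping
        self._last_sent: Dict[str, float] = {}

    def should_send(self, key: str) -> bool:
        now = self.clock()
        last = self._last_sent.get(key)
        if last is not None and now - last < self.window_s:
            return False  # duplicate inside the suppression window
        self._last_sent[key] = now
        return True


def escalation_target(unacked_for_s: float,
                      policy: List[Tuple[float, str]]) -> Optional[str]:
    """Return the target of the latest escalation step whose delay has elapsed."""
    target = None
    for delay_s, name in sorted(policy):
        if unacked_for_s >= delay_s:
            target = name
    return target
```

With a policy such as `[(0, "slack"), (300, "pagerduty"), (900, "sms-manager")]`, an alert left unacknowledged for 400 seconds resolves to `"pagerduty"`. In a multi-instance deployment the last-sent timestamps would need to live in a shared store rather than in process memory.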

Why This Works

Scale: Millions of events per second

Speed: Sub-second processing

Flexibility: Rules are stored as data, so changing a rule needs no deployment

Integration: Works with existing systems
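The "rules as data" point is easiest to see in code. In this sketch the rules are JSON records and the engine is a small interpreter over them, so editing a rule means editing a record rather than shipping code. The rule schema (`id`, `field`, `op`, `value`) and the function names are assumptions for illustration. In the architecture above the records would live in DynamoDB rather than in a string.

```python
import json
import operator

# Map operator names stored in rule data to Python comparison functions.
OPERATORS = {
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
    "<=": operator.le,
    "==": operator.eq,
}

# Hypothetical rule records; in practice these would be fetched from the rules store.
RULES_JSON = """
[
  {"id": "high-temp", "field": "temp_c", "op": ">", "value": 80},
  {"id": "low-pressure", "field": "pressure_kpa", "op": "<", "value": 150}
]
"""


def load_rules(raw: str) -> list:
    """Parse rules from JSON text and reject unknown operators early."""
    rules = json.loads(raw)
    for rule in rules:
        if rule["op"] not in OPERATORS:
            raise ValueError(f"unsupported operator: {rule['op']}")
    return rules


def matching_rules(event: dict, rules: list) -> list:
    """Return the ids of all rules whose condition holds for the event."""
    matched = []
    for rule in rules:
        value = event.get(rule["field"])
        # A rule whose field is absent from the event simply does not match.
        if value is not None and OPERATORS[rule["op"]](value, rule["value"]):
            matched.append(rule["id"])
    return matched
```

Restricting rules to a fixed operator table, instead of evaluating arbitrary expressions, keeps the rules store from becoming a way to run untrusted code.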

Getting Started

Start with one use case, scale gradually. The modular design adapts to your specific challenges.

#RealTimeAlerts #StreamProcessing #ApacheFlink #AWS #DigitalTransformation #PredictiveMaintenance #FraudDetection #Architecture

Written by Data Engineering

Senior engineer with expertise in data engineering. Passionate about building scalable systems and sharing knowledge with the engineering community.
